ABC of Application Architecting on AWS – Series – Part B: Computing

This blog post is the second in a series of three posts on setting up your own application infrastructure on AWS. The three encompass Networks, Computing, and Storage in the respective order. This post is about Computing and there’s a dedicated chapter for each building block and a ton of other useful information! Stay tuned!

The AWS building blocks you will learn about in this post:

EC2 Virtual Machines, EC2 Load Balancers (ELB/ALB/NLB), Auto-Scaling Groups (ASG), Elastic Container Service (ECS), Fargate launch type, and Lambdas.

What can you do with this information?

You can choose the ideal type of compute instance for parts of your application, when you know the key differences and benefits. Instances vary from most configurable to least configurable, coming with more pre-set options (and in optimal cases “boilerplate work is done for you”) along the way. Generally speaking, the less work is done for you, the cheaper it is.

AWS’ EC2 (short for Elastic Compute Cloud) refers to a platform that can be used to launch a virtual machine at will. This platform is then used by all other AWS’ services that provide compute instances. ECS (short for Elastic Container Service) allows you to launch containers either in your self-managed group of EC2 virtual machines (called “ECS Cluster”), or by using “Fargate launch type” where AWS dynamically creates and scales the ECS Cluster for you (though for a higher price than a self-managed cluster).

This same pricing behavior is reflected in AWS’ other PaaS (Platform-as-a-Service) offerings. For example, RDS (Relational Database Service), which allows you to deploy a relational database on a subnet of your choice, is technically equivalent to launching your own EC2 virtual machine and setting up the same kind of relational database there. However, with RDS the updates are performed for you, point-in-time restorations are available, and support is available when the server crashes and the database is down (AWS will make sure it’s restored).

So in brief, you get to know what you’re paying for and what the limitations are.

Parts of AWS Computing block by block

EC2 Virtual Machines

Virtual machines are the foundational part of AWS computing services, and always reside in a selected region. All other computing services are built on top of EC2 virtual machines. To launch your own virtual machine in a region, at minimum you’ll have to:

- choose AMI (Amazon Machine Image - like an operating system installation image)

- and instance type (pre-set CPU & RAM & storage options & networking options)

Leaving everything else to default will launch the instance in a random Subnet of the region’s Default VPC, with Default Security Group attached to it. It’s highly advisable to configure all these options oneself as explained in the previous post.
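As a rough sketch of the choices above, the following Python snippet assembles the parameters one might pass when launching an instance. The parameter names follow the EC2 `RunInstances` API as exposed by boto3's `run_instances`; the AMI, subnet, and security group IDs are placeholders invented for illustration, and the actual API call is left as a comment so the snippet runs without AWS credentials.

```python
from typing import Dict, List, Optional


def build_run_instances_params(
    image_id: str,
    instance_type: str,
    subnet_id: Optional[str] = None,
    security_group_ids: Optional[List[str]] = None,
) -> Dict[str, object]:
    """Assemble keyword arguments for an EC2 RunInstances call."""
    params: Dict[str, object] = {
        "ImageId": image_id,            # the AMI to boot from
        "InstanceType": instance_type,  # pre-set CPU/RAM/network bundle
        "MinCount": 1,                  # launch exactly one instance
        "MaxCount": 1,
    }
    # Omitting these lets AWS fall back to the default VPC and security group.
    if subnet_id is not None:
        params["SubnetId"] = subnet_id
    if security_group_ids:
        params["SecurityGroupIds"] = list(security_group_ids)
    return params


# Placeholder IDs, not real resources.
minimal = build_run_instances_params("ami-0123456789abcdef0", "t3.micro")
explicit = build_run_instances_params(
    "ami-0123456789abcdef0",
    "t3.micro",
    subnet_id="subnet-0example",
    security_group_ids=["sg-0example"],
)

# With boto3 installed and credentials configured, the call would look like:
#   import boto3
#   boto3.client("ec2").run_instances(**explicit)
```

The `minimal` variant corresponds to leaving everything to defaults, while `explicit` pins the subnet and security group as recommended.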

Setting up a virtual machine on AWS is like virtually building and setting up a PC.

Elastic Load Balancing

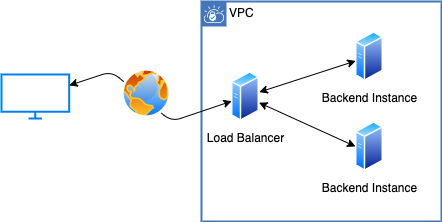

Load Balancing is a method to have a compute instance (with its known public IP address) forwarding requests from the internet to other compute instances (with internal IP addresses) that actually process the requests. The load balancer is also often used for “SSL/TLS Termination” where traffic from the internet to the load balancer is encrypted via HTTPS, and from there onwards (to instances that process requests) traffic is delivered unencrypted via HTTP. However, the main purpose of load balancing is to enable a (scalable) number of processing instances to respond to requests. This way if there’s too much traffic (= demand), you could add compute instances behind the load balancer (= supply) to be able to process all the requests.
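The supply-and-demand idea can be illustrated with a toy round-robin balancer in Python. This is a simplified model and not how AWS implements its load balancers; the class name and backend addresses are made up for the example.

```python
from collections import Counter
from typing import List


class RoundRobinBalancer:
    """Toy load balancer that hands requests to backends in turn."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self.backends = list(backends)
        self._next = 0  # running request counter

    def route(self) -> str:
        """Return the backend that should process the next request."""
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        return backend

    def add_backend(self, backend: str) -> None:
        """Increase supply by registering one more processing instance."""
        self.backends.append(backend)


balancer = RoundRobinBalancer(["10.0.1.10", "10.0.1.11"])
before = Counter(balancer.route() for _ in range(6))

balancer.add_backend("10.0.1.12")  # traffic grew, so add an instance
after = Counter(balancer.route() for _ in range(6))
```

With two backends each one handles half of the requests; after a third is added, the same amount of traffic is spread over three instances.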

Load Balancer (both ALB and NLB) distributes traffic to a scalable number of instances.

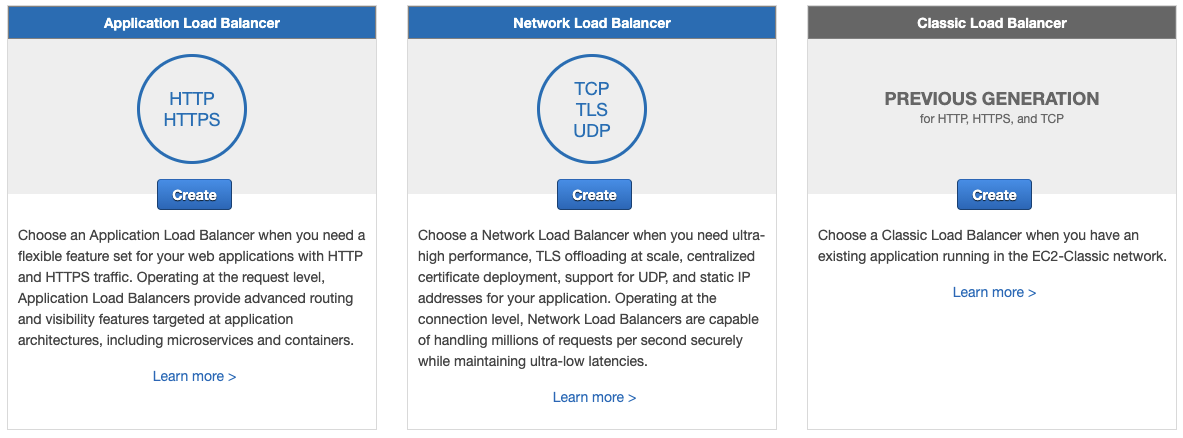

AWS provides a managed load balancing service called Elastic Load Balancing, which consists of two alternative services: Application Load Balancer (ALB) and Network Load Balancer (NLB). Originally, in 2009, it offered only what is now called “Classic Load Balancer”, but that is deprecated and there are good reasons not to use it. Both ALB and NLB support SSL/TLS Termination.

Application Load Balancer operates on the “application layer” [1] of network requests (HTTP, HTTPS, gRPC [2]), and thus you can route requests to “backend” compute instances based on HTTP headers, URL paths, and other content-based information in the request.
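To make content-based routing concrete, here is a minimal Python sketch of rule matching on the URL path and the `Host` header. It is a simplification under stated assumptions: rules are checked in list order with the first match winning, paths are matched by plain prefix, and the `Rule` class and target group names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Rule:
    target_group: str
    path_prefix: Optional[str] = None  # e.g. "/api/"
    host: Optional[str] = None         # e.g. "admin.example.com"

    def matches(self, path: str, headers: Dict[str, str]) -> bool:
        # A rule matches only if every condition it defines is satisfied.
        if self.path_prefix is not None and not path.startswith(self.path_prefix):
            return False
        if self.host is not None and headers.get("host") != self.host:
            return False
        return True


def pick_target_group(
    rules: List[Rule], default: str, path: str, headers: Dict[str, str]
) -> str:
    """Return the target group of the first matching rule, else the default."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    for rule in rules:
        if rule.matches(path, normalized):
            return rule.target_group
    return default


RULES = [
    Rule("admin-servers", host="admin.example.com"),
    Rule("api-servers", path_prefix="/api/"),
]
```

For example, `pick_target_group(RULES, "web-servers", "/api/users", {"Host": "example.com"})` selects `api-servers`, while a request for `/index.html` falls through to the default group.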

Network Load Balancer, on the other hand, operates on the “transport layer” [1] of network requests (TCP, UDP) and is thus configurable to route requests based on the protocol (UDP/TCP) and port number. Because NLB only cares about the port + protocol mapping, requests pass almost instantaneously to instances in the same region, as data centers (AZs) within a region are interconnected with high-bandwidth, low-latency networking. [3]
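By contrast, transport-layer routing needs nothing from the request body. A minimal Python sketch, assuming a hypothetical listener table keyed by protocol and port:

```python
from typing import Dict, Optional, Tuple

# Hypothetical listener table: (protocol, port) -> target group name.
LISTENERS: Dict[Tuple[str, int], str] = {
    ("TCP", 443): "web-targets",
    ("UDP", 53): "dns-targets",
}


def forward(protocol: str, port: int) -> Optional[str]:
    """Pick a target group using only the protocol and the port."""
    return LISTENERS.get((protocol.upper(), port))
```

Here `forward("tcp", 443)` resolves to `web-targets`, and a protocol and port pair without a listener resolves to `None`. No headers or paths are inspected at any point.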

How ALB, NLB, and Classic Load Balancer are displayed in AWS console.

Nothing stops you from setting up your own EC2 compute instance and installing open source software such as HAProxy or Nginx to perform load balancing. The downside is having to maintain it yourself (system updates and any issues that may arise). Technically, these tools can do the equivalent of what ALB does.

Auto-Scaling Groups

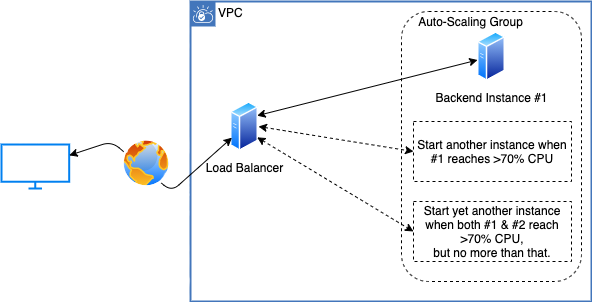

Auto Scaling refers to a configuration stating the number of identical compute instances that must exist at any given time. This number can be static or dynamic (depending on, for example, the CPU load the instances currently bear, or the day of the week). Scaling resources like this is often referred to as “horizontal scaling” or alternatively “scaling out”. AWS’ Auto-Scaling Group service is often used together with Elastic Load Balancing, to provide a number of identical compute instances as the load balancer’s target group.

Instances can be dynamically (horizontally) scaled, based on processed traffic.

Configuring AWS’ Auto-Scaling group requires a “launch template” (specification of an EC2 virtual machine), “minimum size” (number of instances), and “maximum size” (number of instances). These minimum and maximum sizes act as hard limits for the scaling behavior. Optionally, it supports a static number of instances called “desired capacity”. The static number may be set manually by an administrator (resulting in a resize to the newly defined capacity), or be configured to change to a specific capacity at specific recurring times (defined as cron expressions [4]). As an alternative to the desired capacity, it supports an association with the instances’ metrics (e.g. CPU) that triggers a “scaling policy” (a conditional resize when the metrics change).
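The interplay of minimum size, maximum size, desired capacity, and a metric-driven policy can be sketched in a few lines of Python. This is a simplified model: the proportional rule in `scale_for_cpu` is an assumption chosen for illustration, and the sketch silently clamps out-of-range values to show the hard limits, which is not necessarily how the real service reacts to them.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoScalingGroup:
    min_size: int
    max_size: int
    desired_capacity: int

    def set_desired_capacity(self, wanted: int) -> int:
        """Resize the group, never leaving the [min_size, max_size] range."""
        self.desired_capacity = max(self.min_size, min(self.max_size, wanted))
        return self.desired_capacity


def scale_for_cpu(
    group: AutoScalingGroup, average_cpu: float, target_cpu: float = 50.0
) -> int:
    """Resize so that the average CPU would land near the target.

    Assumes the load is spread evenly, so doubling the instances halves
    the per-instance CPU percentage.
    """
    wanted = math.ceil(group.desired_capacity * average_cpu / target_cpu)
    return group.set_desired_capacity(wanted)


group = AutoScalingGroup(min_size=2, max_size=6, desired_capacity=2)
```

Starting from two instances, an average CPU of 90 % against a 50 % target asks for four instances; a later spike to 100 % would ask for eight but is capped at the maximum of six, and a quiet period never drops the group below two.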

Elastic Container Service

Elastic Container Service (abbreviated ECS) is built on top of EC2 Virtual Machines. It’s intended for launching containerized applications in units called “services”, and launching a service in ECS is conceptually similar to launching a virtual machine in plain EC2.

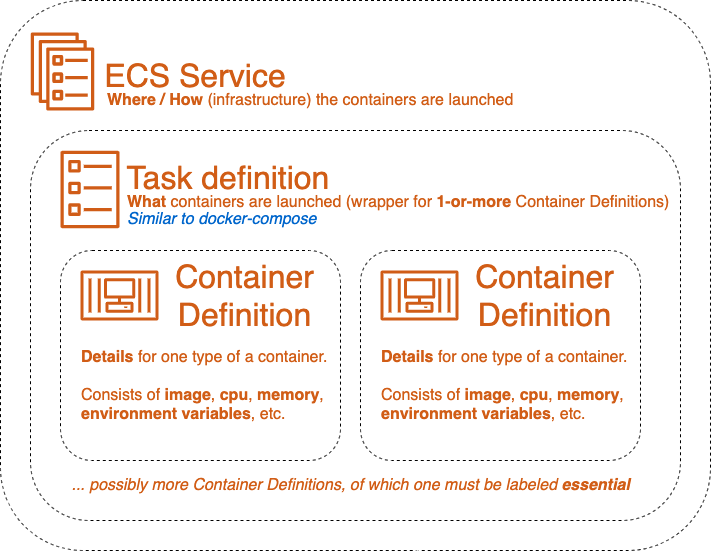

A service is linked to a task definition, and a task definition includes container definitions. Container definitions specify all container level details such as image, portMappings, environment, and any other parameters one might pass when using `docker run` command. Using this analogy to Docker, a task definition could be treated as a docker-compose file, containing one or more related containers. There’s even an existing integration between these tools.[5] [6] Service then includes additional metadata on “where to launch these containers”, “how to launch them”, “how many copies of the service are created”, possible relation to Elastic Load Balancer, and so on.
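The relationship between these pieces can be sketched as plain Python dictionaries. The field names (`family`, `containerDefinitions`, `portMappings`, `environment`, `desiredCount`, `launchType`) follow the ECS API; the concrete values and the `docker_run_command` helper are assumptions made for illustration, with the helper only covering a few of the possible options.

```python
import shlex
from typing import Any, Dict

container_definition: Dict[str, Any] = {
    "name": "web",
    "image": "nginx:latest",
    "portMappings": [{"containerPort": 80, "hostPort": 80, "protocol": "tcp"}],
    "environment": [{"name": "APP_ENV", "value": "production"}],
}

# A task definition groups one or more related containers.
task_definition: Dict[str, Any] = {
    "family": "web-app",
    "containerDefinitions": [container_definition],
}

# A service adds "how many" and "how/where to launch".
service: Dict[str, Any] = {
    "serviceName": "web-service",
    "taskDefinition": task_definition["family"],
    "desiredCount": 2,
    "launchType": "FARGATE",
}


def docker_run_command(container: Dict[str, Any]) -> str:
    """Render a roughly equivalent `docker run` command for one container."""
    args = ["docker", "run", "--name", container["name"]]
    for mapping in container.get("portMappings", []):
        protocol = mapping.get("protocol", "tcp")
        args += ["-p", f"{mapping['hostPort']}:{mapping['containerPort']}/{protocol}"]
    for variable in container.get("environment", []):
        args += ["-e", f"{variable['name']}={variable['value']}"]
    args.append(container["image"])
    return shlex.join(args)
```

Calling `docker_run_command(container_definition)` yields `docker run --name web -p 80:80/tcp -e APP_ENV=production nginx:latest`, which shows how closely a container definition mirrors the arguments of `docker run`.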

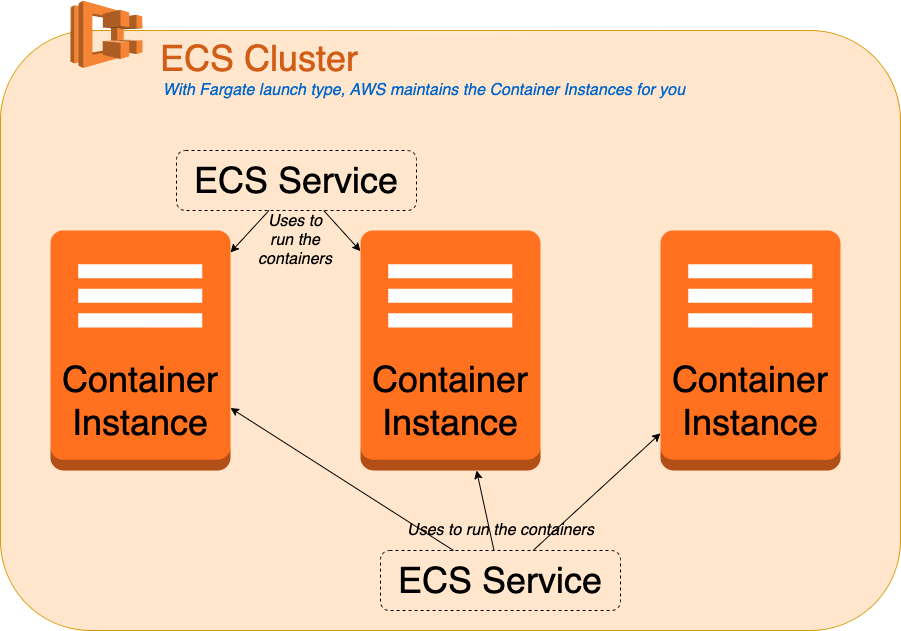

Unlike in EC2, you have to have an existing “ECS Cluster” in order to launch a service in ECS. Every region includes a default ECS Cluster, but it’s good practice to group services in clusters. ECS Cluster defines the available infrastructure (so-called “Container Instances”) for service(s). However, the only required parameter for ECS Cluster is a name. Further parameters depend on the launch types used for the service, of which the “FARGATE” launch type doesn’t require any more definitions (since AWS will internally create any necessary infrastructure on the fly). Alternatively, when using the “EC2” launch type for a service in a cluster, you’ll have to associate the cluster with an auto-scaling group (defined as one of its “capacity providers”). [7]

For container image references (within container definitions) any container registry can be used, for example, DockerHub. AWS Elastic Container Registry (ECR) is a common choice, but even non-AWS registries with custom authentication can be used (e.g. private DockerHub). [8] [9]

Fargate launch type

Fargate is essentially an alternative way to launch applications within ECS (or EKS, Elastic Kubernetes Service, which is similar to ECS but more complex). Whereas you could allocate your own Container Instances within an ECS Cluster and launch your application into those, Fargate removes the need to create Container Instances in the first place. With the Fargate launch type, the allocation of Container Instances is handled by AWS for you.

A common reason for using Fargate is reducing the administration costs (work time) of managing Container Instances. The downside is that Fargate-launched containers are more expensive to run (on an hourly vCPU/memory basis) compared to equivalent self-administered EC2 Container Instances. However, Container Instances are usually not precisely optimized to 100 % used capacity, whereas with Fargate-launched containers you only pay for the resources used. Additionally, there are Savings Plans for committed (“minimum”) vCPU/memory Fargate usage, with savings varying roughly between 15 % and 50 % depending on the period (1 or 3 years) and whether paid upfront. [10]
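The trade-off is easiest to see with a small calculation. The Python sketch below uses hypothetical prices chosen only to make the arithmetic readable; they are not actual AWS prices, which vary by region and change over time.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month

# Hypothetical prices for illustration only, NOT real AWS pricing.
EC2_INSTANCE_PRICE_PER_HOUR = 0.04   # one 2 vCPU / 4 GB instance
FARGATE_VCPU_PRICE_PER_HOUR = 0.04   # per vCPU
FARGATE_GB_PRICE_PER_HOUR = 0.004    # per GB of memory


def ec2_monthly_cost(instance_count: int) -> float:
    """Container Instances run (and cost) around the clock."""
    return instance_count * EC2_INSTANCE_PRICE_PER_HOUR * HOURS_PER_MONTH


def fargate_monthly_cost(
    vcpu: float,
    memory_gb: float,
    hours_running: float,
    savings_plan_discount: float = 0.0,
) -> float:
    """Fargate tasks cost only while they run; a plan lowers the rate."""
    hourly = vcpu * FARGATE_VCPU_PRICE_PER_HOUR + memory_gb * FARGATE_GB_PRICE_PER_HOUR
    return hourly * hours_running * (1.0 - savings_plan_discount)


always_on_ec2 = ec2_monthly_cost(1)                              # about 29.20
always_on_fargate = fargate_monthly_cost(2, 4, HOURS_PER_MONTH)  # about 70.08
office_hours_fargate = fargate_monthly_cost(2, 4, 8 * 30)        # about 23.04
```

Under these made-up numbers, an always-on Fargate task costs clearly more than the equivalent instance, but a task that only runs eight hours a day ends up cheaper than an instance that idles the rest of the time.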

Lambda

Lambda is AWS’ “Function as a Service” offering, which (as the name implies) provides programmable processing power in the size of a single function. This can be contrasted with Elastic Container Service which provides processing power for containerized applications. Providing processing power per function allows scaling horizontally small-granularity bottlenecks in systems, and nothing prevents combining AWS Lambdas together with ECS or EC2 Virtual Machines, in order to move highly variable background tasks to concurrently running functions.

With regards to input, a key difference between Lambda and ECS Containers from a web-development perspective is that lambdas cannot have their ports exposed to the internet (nor can they have a static IP address). Instead, lambdas are launched by providing them with an event from an event source, and this event data is passed as a parameter to the function. Depending on the event source, this event data may arrive in various formats. In typed languages (such as TypeScript), you can either specify your own typings using AWS’ documentation for a specific event source [11] [12] or use a community-maintained package [13]. Event sources include for example AWS’ API Gateway service for HTTP endpoints, AWS’ CloudWatch Rule for scheduled launches, and AWS-SDK for programmatic launch.

With regards to output, if the lambdas were launched synchronously they’re expected to return a value (format again depending on event source - same with typings), and if they were launched asynchronously they can return nothing/null/void.
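A minimal Python handler illustrating both cases might look as follows. It assumes two event shapes: an API Gateway HTTP API proxy event (where query parameters arrive under `queryStringParameters`) and a scheduled CloudWatch event (identified by `"source": "aws.events"`). The greeting logic is made up for the example.

```python
import json
from typing import Any, Dict, Optional


def lambda_handler(event: Dict[str, Any], context: Any) -> Optional[Dict[str, Any]]:
    """Handle either a scheduled event or an HTTP request event."""
    # Scheduled launches are asynchronous: nobody waits for a return value.
    if event.get("source") == "aws.events":
        print("Running scheduled job")
        return None

    # HTTP launches are synchronous: API Gateway expects a response object.
    query = event.get("queryStringParameters") or {}
    name = query.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The same function body serves both event sources; only the shape of `event` and the expected return value differ.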

Lambda can be written in one of many languages (to date, March 2021, 7 languages) specified by the “runtime” parameter, and technically you could implement your own runtime for an otherwise non-supported language. Based on two monitoring service providers, the most common runtimes are currently Python and NodeJS. [14] [15] Runtimes provided by AWS include the AWS-SDK package/library by default (in addition to any standard libraries), but any additional packages have to be included along with the function code during deployment. Lambdas can generally access the internet for communicating with APIs and fetching data (unless they’re configured to launch in an Isolated Subnet; you can check the previous post to learn what that is).

There are several ways to deploy a lambda, but the most common is to create a zip archive consisting of the file containing the function and any third-party dependencies, then upload it together with the necessary metadata (most importantly the function’s filename and function name) either using AWS CLI or through AWS Console. [16]
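The packaging step can be sketched with Python's standard `zipfile` module. The directory layout, the file name `lambda_function.py`, and the dependency folder `vendored_lib` are assumptions for illustration; the archive should be written outside the source directory so it does not end up inside itself.

```python
import tempfile
import zipfile
from pathlib import Path
from typing import List


def build_deployment_package(source_dir: Path, archive_path: Path) -> List[str]:
    """Zip every file under source_dir and return the archived names."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source_dir.rglob("*")):
            if path.is_file():
                # Store paths relative to source_dir so the function file
                # sits at the root of the archive.
                archive.write(path, path.relative_to(source_dir).as_posix())
    with zipfile.ZipFile(archive_path) as archive:
        return archive.namelist()


# Build a throwaway project to demonstrate the layout.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "src"
    (source / "vendored_lib").mkdir(parents=True)
    (source / "lambda_function.py").write_text(
        "def lambda_handler(event, context):\n    return 'ok'\n"
    )
    (source / "vendored_lib" / "__init__.py").write_text("")
    packaged = build_deployment_package(source, Path(tmp) / "function.zip")
```

With this layout the handler metadata would be `lambda_function.lambda_handler`, that is, the file name followed by the function name.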

Notable limitations for AWS Lambda functions are cold start and service quota limits on concurrency. Cold start refers to the time it takes for a lambda to start up and register the event. Since, under the hood, a lambda is one or more containers (= runtime) that execute the custom function body, AWS keeps one container per concurrent execution (= multiple launches of the same lambda at the same time) running only for a certain period of time. This so-called “warm” time is around 30-45 minutes, after which the unused container(s) shut off and have to start up again, making a “cold start”. [17] A cold start generally takes up to 1 second nowadays (there are many language comparisons and lambda CPU/RAM comparisons around the internet), but if you need absolutely minimal response time it can make a difference. Ways around this are provisioned concurrency (where for a price you can have AWS keep a number of containers “warm”) [18], or pinging the lambda with a conditional function clause every N minutes. [19]
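The “conditional function clause” can be sketched as follows. The sketch relies on the fact that module-level state survives between invocations while a container stays warm; the `warmer` event key and the doubling “work” are hypothetical stand-ins, not the format of any particular warming library.

```python
from typing import Any, Dict

# Module-level code runs once per container, i.e. on a cold start.
_is_cold_start = True


def warm_aware_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Short-circuit on warming pings, otherwise do the real work."""
    global _is_cold_start
    was_cold = _is_cold_start
    _is_cold_start = False  # later invocations reuse the warm container

    # Hypothetical marker set by a scheduled "ping" event.
    if event.get("warmer"):
        return {"warmed": True, "cold_start": was_cold}

    # Stand-in for the actual function body.
    return {"result": event.get("value", 0) * 2, "cold_start": was_cold}
```

A scheduled rule sending `{"warmer": true}` every N minutes would then keep a container alive at minimal cost, since the ping returns before any real work is done.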

Service quota limits are (mostly) Region-specific per-account limits that protect AWS customers from unintended spending. [20] By default, Lambda has a concurrency limit (“number of simultaneously processing functions”) of 1,000 that is account-wide, irrespective of which lambda is used. [21] In other words, if you have 2 lambdas that are each constantly processing 500 events simultaneously, you’ll hit the limit on the 501st simultaneous event on either lambda. For an account with possibly multiple applications all using AWS Lambda, this means one application may cause errors for another. This limit can be raised via a support ticket. [22]
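The shared limit lends itself to a quick back-of-the-envelope check. The Python sketch below assumes the default limit of 1,000 mentioned above and estimates needed concurrency as requests per second multiplied by average duration; the function names and traffic figures are made up for illustration.

```python
import math
from typing import Dict

ACCOUNT_CONCURRENCY_LIMIT = 1000  # default account-wide limit


def required_concurrency(requests_per_second: float, average_duration_s: float) -> int:
    """Estimate how many executions are in flight at the same time."""
    return math.ceil(requests_per_second * average_duration_s)


def remaining_concurrency(
    in_flight: Dict[str, int], limit: int = ACCOUNT_CONCURRENCY_LIMIT
) -> int:
    """Concurrency left for ANY function in the account."""
    return max(0, limit - sum(in_flight.values()))


# Two lambdas each processing 500 events use up the whole pool.
busy_account = {"resize-images": 500, "send-emails": 500}
headroom = remaining_concurrency(busy_account)  # 0, the next event is throttled

# 200 requests/s at 0.5 s each keep roughly 100 executions in flight.
estimate = required_concurrency(200, 0.5)
```

Running this kind of estimate per application before sharing an account helps spot cases where one workload could starve another.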

AWS Lambda is a great utility for many use-cases ranging from highly scalable background processing to low-cost serverless APIs, but it requires some planning ahead and benchmarking of concurrent executions (especially in event-driven architectures where the number of concurrent events may become very high). It can complement persistently (24/7/365) running EC2 services and ECS tasks, or replace them altogether depending on the scenario.

Notes

- EC2 Virtual Machines are the underlying infrastructure whether you use them or not.

- ECS Services can utilize your own EC2 “Container Instances” (cheaper-per-CPU-hour, but maintenance overhead and running 24/7 instances may turn out more expensive). Alternatively let AWS take care of infrastructure using Fargate (more expensive per CPU hour, but no maintenance and only pay per use).

- Lambdas allow you to run short (up to 15 minutes duration) functions, based on events. Similarly to Fargate you only pay per use, but concurrent scaling (based on the number of simultaneous events) is automated.

References

2 Elastic Load Balancing features (aws.amazon.com)

3 Availability Zones (aws.amazon.com)

4 Schedule Expressions for Rules (docs.aws.amazon.com)

5 Create a Compose File (docs.aws.amazon.com)

6 ECS integration architecture (docs.docker.com)

7 Auto Scaling group capacity providers (docs.aws.amazon.com)

8 Private registry authentication for tasks (docs.aws.amazon.com)

9 Authenticating with Docker Hub for AWS Container Services (aws.amazon.com)

10 Pricing with Savings Plans (aws.amazon.com)

11 Working with AWS Lambda proxy integrations for HTTP APIs (docs.aws.amazon.com)

12 Using AWS Lambda with Amazon CloudWatch Events (docs.aws.amazon.com)

13 https://github.com/DefinitelyTyped/DefinitelyTyped/tree/master/types/aws-lambda

14 AWS Lambda in Production: State of Serverless Report 2017 (New Relic Blog)

15 What AWS Lambda’s Performance Stats Reveal (The New Stack)

16 Lambda deployment packages (docs.aws.amazon.com)

17 AWS Lambda execution environment (docs.aws.amazon.com)

18 New for AWS Lambda – Predictable start-up times with Provisioned Concurrency (aws.amazon.com)

19 https://github.com/robhowley/lambda-warmer-py

20 AWS service quotas (docs.aws.amazon.com)

21 Managing AWS Lambda Function Concurrency (aws.amazon.com)

22 Lambda quotas (docs.aws.amazon.com)

This was the second blog post in a series of three posts on setting up your own application infrastructure on AWS. Next up, Storage! Follow and stay tuned!

Read the first part of the series:

ABC of Application Architecting on AWS – Series – Part A: Networks